ThompsonSamplingPolicy works by maintaining a prior on the mean rewards of its arms.

It follows a beta-binomial model with parameters alpha and beta: for each arm, it samples a value from that arm's current Beta distribution and picks the arm with the highest sampled value.

When an arm is pulled and a Bernoulli reward is observed, the policy updates that arm's distribution based on the reward.

This procedure is repeated for the next arm pull.
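The procedure described above can be sketched in a few lines of base R. This is only an illustrative sketch of Beta-Bernoulli Thompson sampling, not the package's own implementation; the variable names (true_means, pulls, sampled) and the chosen reward probabilities are assumptions made for the example.

```r
# Minimal Beta-Bernoulli Thompson sampling in base R (no package required).
set.seed(42)

true_means <- c(0.9, 0.1, 0.1)   # hypothetical Bernoulli success probabilities
k          <- length(true_means)
horizon    <- 500L

# Start every arm with a Beta(1, 1) distribution, i.e. uniform on [0, 1].
alpha <- rep(1, k)
beta  <- rep(1, k)
pulls <- integer(k)

for (t in seq_len(horizon)) {
  # Draw one plausible mean reward per arm from its current Beta distribution.
  sampled <- rbeta(k, shape1 = alpha, shape2 = beta)
  # Play the arm whose sampled value is highest.
  arm <- which.max(sampled)
  # Observe a Bernoulli reward (0 or 1) from the chosen arm.
  reward <- rbinom(1, size = 1, prob = true_means[arm])
  # Conjugate update: a success increments alpha, a failure increments beta.
  alpha[arm] <- alpha[arm] + reward
  beta[arm]  <- beta[arm] + (1 - reward)
  pulls[arm] <- pulls[arm] + 1L
}

# Estimated mean reward per arm after the run.
posterior_mean <- alpha / (alpha + beta)
print(pulls)
print(round(posterior_mean, 2))
```

Because only the pulled arm is updated, arms that keep producing low samples are tried less and less often, which is how the policy balances exploration and exploitation.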

Usage

policy <- ThompsonSamplingPolicy$new(alpha = 1, beta = 1)

Arguments

alpha: integer, a natural number N>0 - first parameter of the Beta distribution

beta: integer, a natural number N>0 - second parameter of the Beta distribution
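To see what alpha and beta mean in practice, the following base R sketch computes the prior mean and the posterior mean for a single arm. It assumes the standard conjugate Beta-Bernoulli update, in which alpha and beta act as pseudo-counts of successes and failures; the observed counts are made up for illustration.

```r
# Prior and posterior summaries for a single arm under a Beta-Bernoulli model.
alpha <- 1   # prior pseudo-count of successes
beta  <- 1   # prior pseudo-count of failures

# Mean of a Beta(alpha, beta) distribution.
prior_mean <- alpha / (alpha + beta)

# Hypothetical observations for one arm: 7 rewards of 1 and 3 rewards of 0.
successes <- 7
failures  <- 3

# Conjugate update: add observed counts to the prior pseudo-counts.
post_alpha <- alpha + successes
post_beta  <- beta + failures
posterior_mean <- post_alpha / (post_alpha + post_beta)

print(prior_mean)
print(posterior_mean)
```

Larger values of alpha and beta make the prior more concentrated, so more observations are needed before the data dominates the estimate.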

Methods

new(alpha = 1, beta = 1): generates a new ThompsonSamplingPolicy object. Arguments are defined in the Arguments section above.

set_parameters(): each policy needs to assign the parameters it wants to keep track of to the list self$theta_to_arms, which has to be defined in the body of set_parameters(). The parameters defined here can later be accessed by arm index in the following way:

theta[[index_of_arm]]$parameter_name

get_action(context): here, a policy decides which arm to choose, based on the current values of its parameters and, potentially, the current context.

set_reward(reward, context): here, a policy updates its parameter values based on the reward received and, potentially, the current context.
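The per-arm access pattern mentioned under set_parameters() can be illustrated with a plain R list. This is a hypothetical stand-in and does not reproduce the package's internal data structure; the parameter names alpha and beta and the number of arms are assumptions for the example.

```r
# Hypothetical per-arm parameter list: one named list of parameters per arm.
k <- 3L
theta <- lapply(seq_len(k), function(i) list(alpha = 1, beta = 1))

# Read a parameter of arm 2 by arm index and parameter name.
arm2_alpha <- theta[[2]]$alpha

# Update arm 2 after a hypothetical reward of 1, leaving other arms untouched.
reward <- 1
theta[[2]]$alpha <- theta[[2]]$alpha + reward
theta[[2]]$beta  <- theta[[2]]$beta + (1 - reward)

str(theta[[2]])
```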

References

Thompson, W. R. (1933). On the likelihood that one unknown probability exceeds another in view of the evidence of two samples. Biometrika, 25(3/4), 285-294.

Chapelle, O., & Li, L. (2011). An empirical evaluation of Thompson sampling. In Advances in Neural Information Processing Systems (pp. 2249-2257).

Agrawal, S., & Goyal, N. (2013, February). Thompson sampling for contextual bandits with linear payoffs. In International Conference on Machine Learning (pp. 127-135).

See also

Core contextual classes: Bandit, Policy, Simulator, Agent, History, Plot

Bandit subclass examples: BasicBernoulliBandit, ContextualLogitBandit, OfflineReplayEvaluatorBandit

Policy subclass examples: EpsilonGreedyPolicy, ContextualLinTSPolicy

Examples

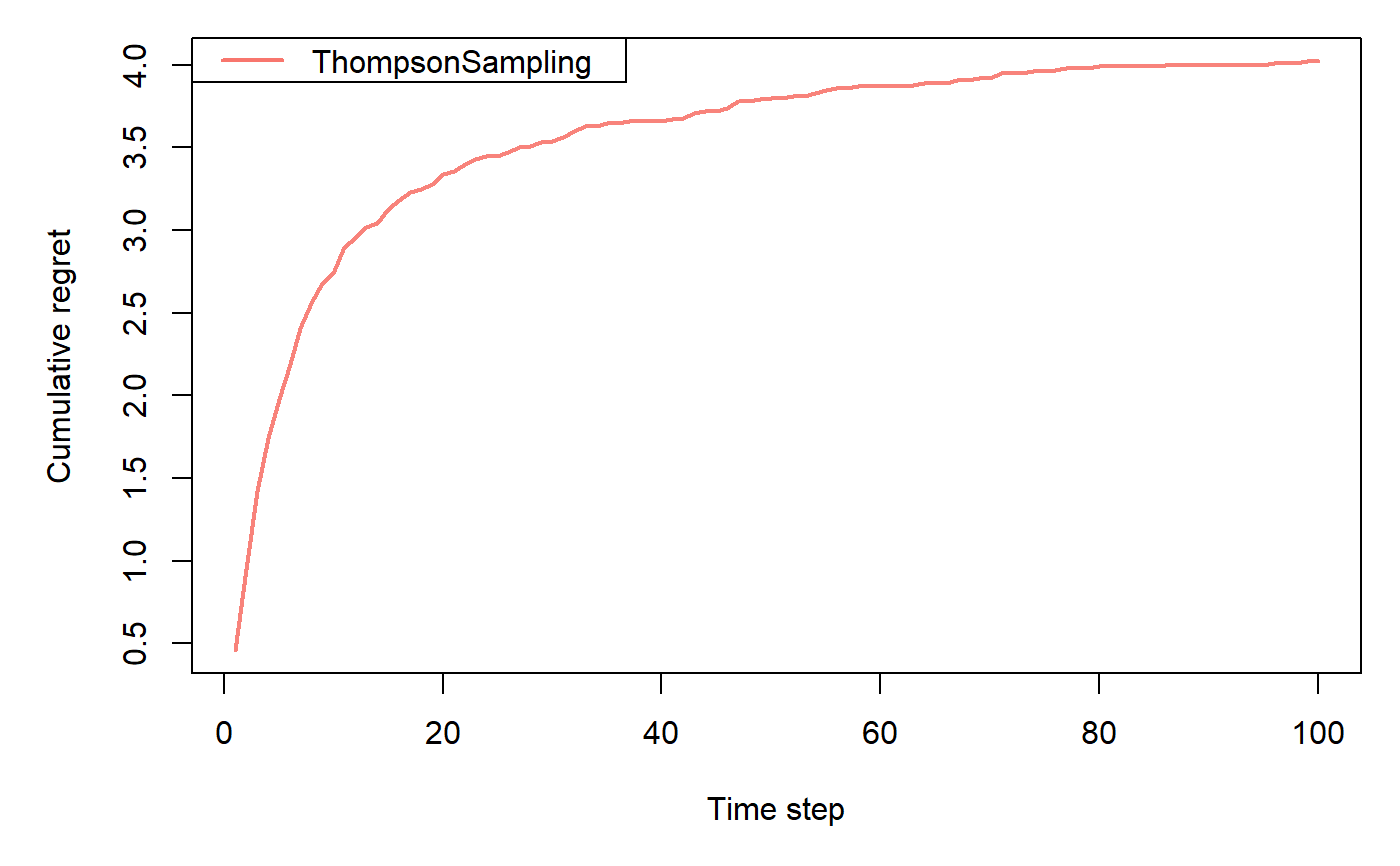

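library(contextual)

# Number of time steps per simulation and number of repeated simulations.
horizon     <- 100L
simulations <- 100L

# Success probability of each arm of the Bernoulli bandit.
weights     <- c(0.9, 0.1, 0.1)

policy      <- ThompsonSamplingPolicy$new(alpha = 1, beta = 1)
bandit      <- BasicBernoulliBandit$new(weights = weights)
agent       <- Agent$new(policy, bandit)

# Run the simulation sequentially and collect the resulting history.
history     <- Simulator$new(agent, horizon, simulations, do_parallel = FALSE)$run()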